The crisis in social media: where are we headed?

Social media is at a point of crisis. Facebook and Twitter have been increasingly losing out to toxicity and violence. Twitter co-founder Evan Williams has expressed regret for having founded it. Mark Zuckerberg apologized for Facebook's failure to tackle fake news after initially denying responsibility outright. Meanwhile, fake news outlets and rumour mills are churning out stories by the minute. Served hot and fresh, these stories fuel hatred and polarise opinion, and a generation has come to accept obnoxiousness as a way of life and one-sided opinions and rants as facts.

Twitter, the problem child of social media, has since its advent been a rogue satellite gone awry, aimlessly sputtering venom as it hurtled into our digital space. Having putrefied the wider online world, it is now perishing in its own negativity.

Facebook, on the other hand, had been the better cousin, appearing to try to contain the damage through a self-imposed moderation policy, even if for its own business reasons. But an investigation published by The Guardian last week revealed several damning instances of how it is at sea when using moderation to contain the monstrous toxicity occasionally unleashed on its platform.

Users are increasingly fed up with the trolling and bullying that has become almost second nature to social media. Many a victim has been left heartbroken and traumatised after being torn apart by faceless mobs. Lives have even been taken on camera, with shootings and suicides broadcast live for the whole world to see. The US presidential election proved to be a tipping point: since then, it has been mostly downhill for social media companies in terms of content.

Broken Internet: The castle without a door

“There’s a lock on our office door and our homes at night. The internet was started without the expectation that we’d have to do that online,” Evan Williams, Twitter co-founder and its single largest shareholder, recently told David Streitfeld of The New York Times.

The openness that earlier internet users showed towards new ideas, and their intellectual forbearance towards opposing viewpoints, have today given way to crassness, asininity and vulgarity. The world was not ready for the degree of free speech these platforms made possible. In such a scenario, questioning the very need for such freedom appears reasonable.

The Elections: The recent American presidential election reflected how much the open internet has changed for the worse. The fake news that spread during the campaign forced Twitter and Facebook, after varying rounds of self-justifying introspection, to openly apologize for their failures in dealing with fake news, trolls and online bullying.

Live Murders: Headlines the world over increasingly report social media being misused to broadcast violence against others, suicides and child abuse. The number of people who actively watch such content, liking it and encouraging the behavior, reveals a new and disturbing appetite among users for morbid gratification.

More moderation: After several shootings and suicides were streamed on Facebook Live, Facebook announced that it would hire 3,000 more moderators, or compliance officers, to monitor the feature for murders and suicides. This would bring Facebook's global operations team that reviews flagged content to 7,500 people. So how successful have the moderators been in reducing such incidents?

Facebook’s troubles with content multiplied once it opened the Facebook Live feature to all users. Moderation did not seem to be working as expected. In numerous instances, live-streamed videos of violence and suicides remained online for hours or even days in spite of being flagged. Little was known about how, and on what basis, Facebook made decisions about content. Some clarity on this front is now available thanks to the Guardian’s investigation, based on materials used to train moderators in the Philippines. And it by no means paints a rosy picture.

The Guardian: The Facebook Files

The Guardian published a series of articles based on Facebook’s previously secret rules and guideline manuals, which are used both to train its moderators and to guide them in deciding what the site’s 2 billion users can post and which reported content should or should not be removed.

The series draws on more than 100 internal training manuals, spreadsheets and flowcharts that give unprecedented insight into the blueprints Facebook has used to moderate issues such as violence, hate speech, terrorism, pornography, racism and self-harm. Many of the positions Facebook takes in these materials appear questionable.

A close reading of the revelations suggests that while many of the guidelines appear random, subjective and at times flimsy, others are downright insensitive. Moderators reportedly have about 10 seconds to decide on each piece of reported content. Given the sheer volume of reported and flagged content, it is not surprising that live-streamed suicides and murders stay online for long periods despite being reported by users. The moderators are stretched too thin.

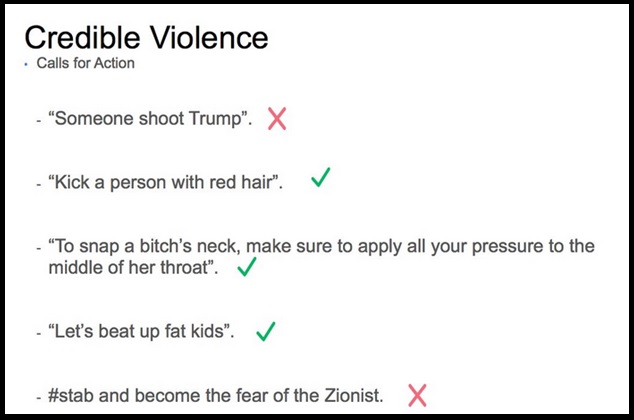

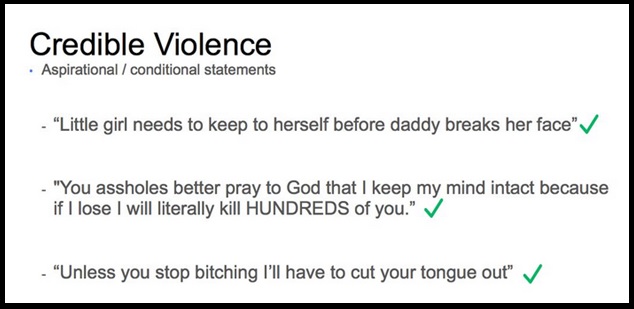

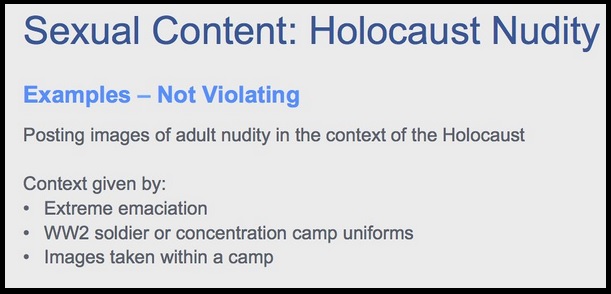

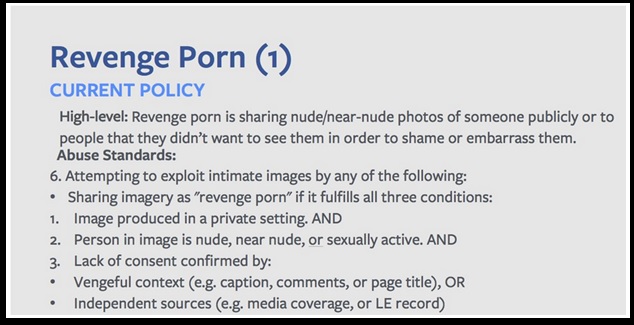

The Guardian’s Facebook Files make public Facebook’s guidelines on moderating revenge porn, graphic violence, racism, nudity and much more. What stands out is the inconsistency and peculiar nature of some of the policies. Those on sexual content, for example, appear complex and confusing, and many seem poorly thought out. Moderators have themselves raised concerns about the unclear guidelines, some of which are reproduced above. Some forms of threat are considered acceptable while others aren’t. Some forms of racism are acceptable while others aren’t. At times, it is not the content of a video or image that makes it removable but the tone and tenor of its captions. There are inconsistencies galore in the material obtained by The Guardian.

The hypocrisy

The biggest takeaway from this leak is that it took a leak for Facebook’s moderation policies to become public. The underlying reason for fiercely resisting public disclosure of how it moderated content appears to be to hide the fact that the emperor was skimpily clad.

While Facebook and its evil twin Twitter have continued doing all that the traditional news media does, including making revenue, they have long argued that they are merely technology platforms. Unlike traditional media, they have kept themselves out of content regulation. A token moderation policy has been held up as proof of their intention to help their users.

Twitter’s moderation policy, owing to its free-speech doctrine, has always left its users to fend for themselves. The leaked Facebook Files reveal that Facebook’s moderation policy, too, is amateurishly flawed in places.

To begin with, as American companies, both lean heavily towards the American ideal of near-absolute freedom of speech. As Emily Bell has pointed out, this is why “Legally, Facebook is not obliged to moderate what appears on its platform at all. In fact, it didn’t have any policy or moderation team looking at content until 2009.”

As Bell notes, “In ‘The New Governors: The People, Rules and Processes Governing Online Speech,’ legal scholar Kate Klonick describes how, despite technology platforms being protected from publishing liability by Section 230 of the Communications Decency Act in the US, many, including Facebook, built internal moderation systems to help protect their businesses.”

This protection from publishing liability might also explain Twitter’s long-standing ‘can’t give a rat’s ass’ attitude towards victims of abuse. But how long can Facebook and Twitter, the predominant social media platforms, continue with impunity? The situation in Germany points to a slow turnaround in the narrative.

Germany: The law finally catching up?

Amidst the growing clamour for better control and regulation of online content, Europe is leading the way. Germany is trying to become the first country to subject social media platforms to its own laws, and it may well pave the way for other countries to follow suit.

“There can be just as little space in the social networks as on the street for crimes and slander,” German Federal Justice and Consumer Protection Minister Heiko Maas is reported to have said.

“The biggest problem,” according to Maas, “is that the networks do not take the complaints of their own users seriously enough.” This sums up what victims of online abuse have been crying out for years, and the law finally seems to be catching up.

Heiko Maas is at the heart of Germany’s efforts to leave no room online for crimes and slander, efforts meant to be enforced through a new law. The law aims to empower German authorities to impose massive fines on social media platforms that fail to take down content flagged as illegal. Behind these efforts lie assurances that the tech firms themselves gave about removing hate speech.

Back in December 2015, Facebook, Twitter and Google (for YouTube) assured the German government that they would remove criminal hate speech from their platforms within 24 hours. In May 2016, Facebook, Twitter, Google and Microsoft agreed with the European Commission on a code of conduct that also committed them to removing hate speech within 24 hours.

A German government-funded study carried out by Jugendschutz, which monitored the companies’ performance, found that Facebook had become worse at promptly handling user complaints. The company deleted or blocked 39 percent of the criminal content reported by users, and only one-third of the content reported by Facebook users was deleted within 24 hours of the complaint. Twitter’s performance was even worse: only one in a hundred reported messages was removed, and none of the deletions took place within 24 hours. By contrast, Google made significant improvements in handling complaints about YouTube content. The study found that 90 percent of user-reported content was deleted from the platform, and 82 percent of the deletions occurred within 24 hours of notification.

Under Germany’s newly proposed Network Enforcement Law, “Social networks that do not create an effective complaint management system capable of deleting criminal contents effectively and swiftly could be punished via fines of up to €5 million against the individual person responsible for dealing with the complaint, and larger fines of up to €50 million against the company itself. Fines also could be imposed if the company does not comply fully with its reporting obligation.” The proposed law would also require social networks to appoint a “responsible contact person” in Germany who could be served in criminal and civil proceedings, and who would personally face a fine of up to €5 million.

It remains to be seen whether this law eventually comes into force and whether it opens the door to many more such laws worldwide, ushering in a new age of accountability for moderators and social media companies alike. This could well be the beginning of the end of social media as we know it today, and probably for the better. After all, what is not acceptable on the streets should not be acceptable online either.