Twitter’s latest crackdown on abuse is a damp squib

“Twitter announces crackdown on abuse with new filter and tighter rules,” reported The Guardian on 21 April 2015. For a company whose CEO had publicly admitted its shortcomings in dealing with the trolls who filled its service with vitriol, this could have been a moment of reckoning, the time to show the world that it really meant what it said. Alas! That was not to be.

Twitter has kept barking up the wrong tree, even after identifying the ill that plagues its platform: trolls and trolling. After all, since its inception it has stuck to the argument that it will not censor content because it believes in free speech, a position it has stubbornly held to this day.

All Twitter did back in 2015 was introduce a few filters that automatically prevented users from seeing threatening messages, later followed by an option to mute accounts. None of this helped the many victims of abuse, who were by then mentally drained.

Which is what makes this week’s announcement all the more interesting. On 7 February 2017, Ed Ho (@mrdonut), VP of Engineering at Twitter, announced the latest set of measures addressing Twitter’s safety concerns.

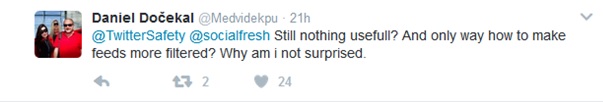

So what has changed in the two years since Twitter’s chief executive acknowledged that “we suck at dealing with abuse and trolls on the platform, and we’ve sucked at it for years”?

The latest announcement included three changes: stopping the creation of new abusive accounts, bringing forward safer search results, and collapsing potentially abusive or low-quality Tweets.

1. Stopping the creation of new abusive accounts:

The vagueness about how this will be achieved would make even the great Sir Humphrey of “Yes Minister” proud. Apparently Twitter is “taking steps to identify people who have been permanently suspended and stop them from creating new accounts. This focuses more effectively on some of the most prevalent and damaging forms of behavior, particularly accounts that are created only to abuse and harass others.”

How will such identification stop the creation of new accounts? As my humble mind tells me, the new accounts are yet to be created. Is this the same old story of IP-address-based blocking? If so, what will Twitter do about shared IP addresses, proxies and the like? Or will it rely on the phone numbers that suspended users are currently required to enter to have a suspension lifted? There is no clarity. Hopefully Twitter will reveal something specific about this vague-sounding measure in the coming days.

The rest of the updates are déjà vu. Even now, it seems Twitter can do little about existing trolls other than hide them. Considering how difficult it is to get Twitter to suspend or delete accounts, this is disappointing.

2. Introducing safer search results:

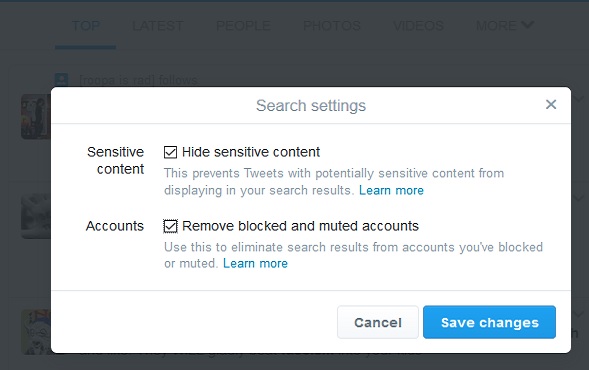

Twitter also announced that it was working on ‘safe search’, which removes from search results Tweets that contain potentially sensitive content as well as Tweets from blocked and muted accounts. This content will still be discoverable if you look for it, but it will no longer clutter search results.

This is no different from Twitter’s earlier approach of throwing a blanket over the potential victim to soften the punch while arming the abuser to the hilt. Safe search is not intended to stop abuse and harassment in the first place; it only makes the abuse appear to vanish once a logged-in user enables it. All it does is drop search results that come from accounts the user has blocked or muted, or that contain sensitive content.

3. Collapsing potentially abusive or low-quality Tweets:

The last safety feature identifies and collapses “potentially abusive and low-quality replies so the most relevant conversations are brought forward. These Tweet replies will still be accessible to those who seek them out.”

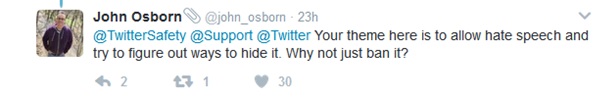

This last measure follows the same old philosophy: that victims of abuse on Twitter are weaklings who lack the heart to face criticism and public flak, and that Twitter is rolling out these features to soothe those babies. As this user pointed out:

What to make of it?

Whatever the year of the update, and however flowery its concern for victims of harassment and abuse, Twitter’s policy has never pointed in the right direction. It is the trolls who abuse and harass, yet Twitter has always focused on stopping people from seeing the content that would prompt them to report it. It expects its users to feel safe by burying their heads in the sand, when Twitter should be stopping the abusers from going scot-free.

Nor do the new features do anything about fake accounts such as these, which violate Twitter’s policies on many counts.

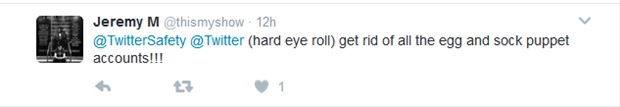

Egg and sockpuppet accounts are another problem on Twitter. A sockpuppet is an online identity used for deception. The term now also covers other misleading uses of online identities, such as those created to praise, defend or support a person or organization, to manipulate public opinion, or to circumvent a suspension or ban from a website. “The egg” is the default avatar that Twitter gives every new account, and many accounts, automated or not, never bother to upload a picture.

There have been many suggestions for countering egg and sockpuppet accounts, such as automatically muting unknown accounts or deanonymizing accounts, yet Twitter remains oblivious. Numerous such accounts are allowed to proliferate and spew hatred, for whatever reason, in spite of repeated calls for their deletion.

Twitter’s response to all this has been “We will let you filter them out”, or, as this user aptly put it:

There is something fundamentally flawed in Twitter’s approach to the whole issue of trolls and online abuse. Even when someone reports an account that is repeatedly abusive, no action is taken unless the person reporting is someone famous.

Judging by the latest update, it could be a long time before Twitter takes effective punitive action to regulate the behaviour of its abusive users. It needs to start by taking user reports far more seriously and acting on them even when the reporter is not a celebrity threatening to quit Twitter.